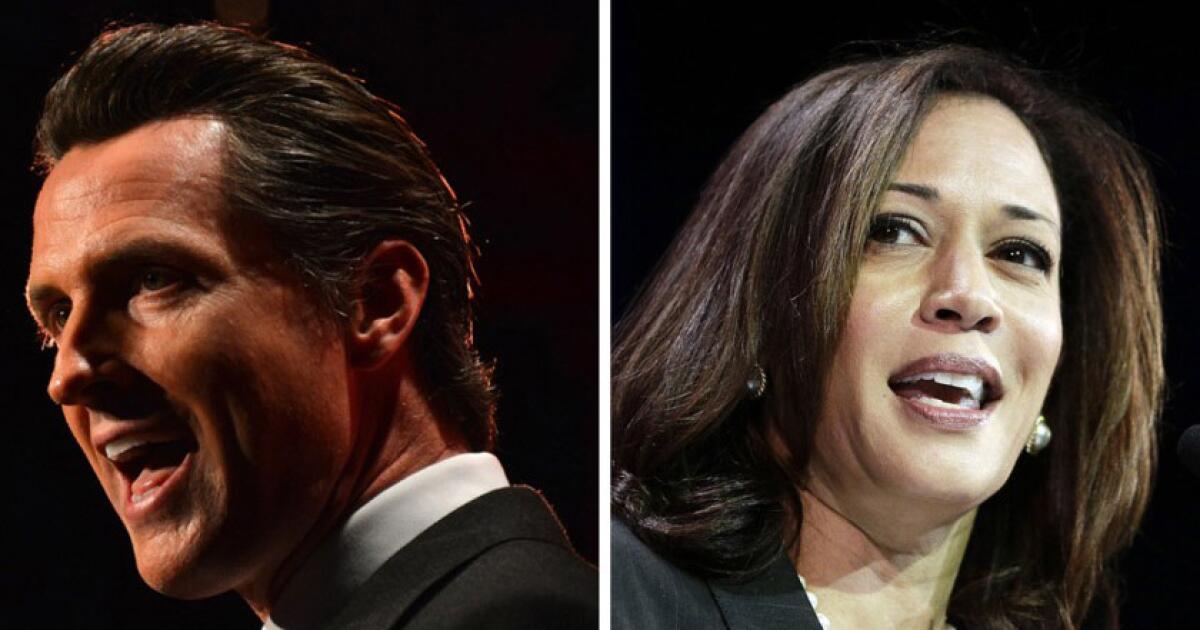

California lawmakers want Gov. Gavin Newsom to approve bills they passed that aim to make artificial intelligence chatbots safer. But as the governor weighs whether to sign the legislation into law, he faces a familiar hurdle: objections from tech companies that say new restrictions would hinder innovation.

Californian companies are world leaders in AI and have spent hundreds of billions of dollars to stay ahead in the race to create the most powerful chatbots. The rapid pace has alarmed parents and lawmakers worried that chatbots are harming the mental health of children by exposing them to self-harm content and other risks.

Parents who allege chatbots encouraged their teens to harm themselves before they died by suicide have sued tech companies such as OpenAI, Character Technologies and Google. They’ve also pushed for more guardrails.

Calls for more AI regulation have reverberated throughout the nation’s capital and various states. Even as the Trump administration’s “AI Action Plan” proposes to cut red tape to encourage AI development, lawmakers and regulators from both parties are tackling child safety concerns surrounding chatbots that answer questions or act as digital companions.

California lawmakers this month passed two AI chatbot safety bills that the tech industry lobbied against. Newsom has until mid-October to approve or reject them.

The high-stakes decision puts the governor in a tricky spot. Politicians and tech companies alike want to assure the public they’re protecting young people. At the same time, tech companies are trying to expand the use of chatbots in classrooms and have opposed new restrictions they say go too far.

Suicide prevention and crisis counseling resources

If you or someone you know is struggling with suicidal thoughts, seek help from a professional and call 9-8-8. The United States’ first nationwide three-digit mental health crisis hotline 988 will connect callers with trained mental health counselors. Text “HOME” to 741741 in the U.S. and Canada to reach the Crisis Text Line.

Meanwhile, if Newsom runs for president in 2028, he might need more financial support from wealthy tech entrepreneurs. On Sept. 22, Newsom promoted the state’s partnerships with tech companies on AI efforts and touted how the tech industry has fueled California’s economy, calling the state the “epicenter of American innovation.”

He has vetoed AI safety legislation in the past, including a bill last year that divided Silicon Valley’s tech industry because the governor thought it gave the public a “false sense of security.” But he also signaled that he’s trying to strike a balance between addressing safety concerns and ensuring California tech companies continue to dominate in AI.

“We have a sense of responsibility and accountability to lead, so we support risk-taking, but not recklessness,” Newsom said at a discussion with former President Clinton at a Clinton Global Initiative event on Wednesday.

Two bills sent to the governor, Assembly Bill 1064 and Senate Bill 243, aim to make AI chatbots safer but face stiff opposition from the tech industry. It’s unclear if the governor will sign both bills. His office declined to comment.

AB 1064 bars a person, business or other entity from making companion chatbots available to a California resident under the age of 18 unless the chatbot isn’t “foreseeably capable” of harmful behavior such as encouraging a child to engage in self-harm, violence or disordered eating.

SB 243 requires operators of companion chatbots to notify certain users that the virtual assistants aren’t human.

Under the bill, chatbot operators would have to have procedures to prevent the production of suicide or self-harm content and put in guardrails, such as referring users to a suicide hotline or crisis text line.

They would be required to remind minor users at least every three hours to take a break and that the chatbot is not human. Operators would also be required to implement “reasonable measures” to prevent companion chatbots from generating sexually explicit content.

Tech lobbying group TechNet, whose members include OpenAI, Meta, Google and others, said in a statement that it “agrees with the intent of the bills” but remains opposed to them.

AB 1064 “imposes vague and unworkable restrictions that create sweeping legal risks, while cutting students off from valuable AI learning tools,” said Robert Boykin, TechNet’s executive director for California and the Southwest, in a statement. “SB 243 establishes clearer rules without blocking access, but we continue to have concerns with its approach.”

A spokesperson for Meta said the company has “concerns about the unintended consequences that measures like AB 1064 would have.” The tech company launched a new Super PAC to fight state AI regulation that the company thinks is too burdensome, and is pushing for more parental control over how kids use AI, Axios reported on Tuesday.

Opponents led by the Computer & Communications Industry Assn. lobbied aggressively against AB 1064, stating it would threaten innovation and disadvantage California companies that would face more lawsuits and have to decide if they wanted to continue operating in the state.

Advocacy groups, including Common Sense Media, a nonprofit that sponsored AB 1064 and recommends that minors shouldn’t use AI companions, are urging Newsom to sign the bill into law. California Atty. Gen. Rob Bonta also supports the bill.

The Electronic Frontier Foundation said SB 243 is too broad and would run into free-speech issues.

Several groups, including Common Sense Media and Tech Oversight California, removed their support for SB 243 after changes were made to the bill, which they said weakened protections. Some of the changes limited who receives certain notifications and included exemptions for certain chatbots in video games and virtual assistants used in smart speakers.

Lawmakers who introduced chatbot safety legislation want the governor to sign both bills, arguing that they could both “work in harmony.”

Sen. Steve Padilla (D-Chula Vista), who introduced SB 243, said that even with the changes he still thinks the new rules will make AI safer.

“We’ve got a technology that has great potential for good, is incredibly powerful, but is evolving incredibly rapidly, and we can’t miss a window to provide commonsense guardrails here to protect folks,” he said. “I’m happy with where the bill is at.”

Assemblymember Rebecca Bauer-Kahan (D-Orinda), who co-wrote AB 1064, said her bill balances the benefits of AI while safeguarding against the dangers.

“We want to make sure that when kids are engaging with any chatbot that it is not creating an unhealthy emotional attachment, guiding them towards suicide, disordered eating, any of the things that we know are harmful for children,” she said.

During the legislative session, lawmakers heard from grieving parents who lost their children. AB 1064 highlights two high-profile lawsuits: one against San Francisco ChatGPT maker OpenAI and another against Character Technologies, the developer of chatbot platform Character.AI.

Character.AI is a platform where people can create and interact with digital characters that mimic real and fictional people. Last year, Florida mom Megan Garcia alleged in a federal lawsuit that Character.AI’s chatbots harmed the mental health of her son Sewell Setzer III and accused the company of failing to notify her or offer help when he expressed suicidal thoughts to virtual characters.

More families sued the company this year. A Character.AI spokesperson said they care very deeply about user safety and “encourage lawmakers to appropriately craft laws that promote user safety while also allowing sufficient space for innovation and free expression.”

In August, the California parents of Adam Raine sued OpenAI, alleging that ChatGPT provided the teen information about suicide methods, including the one the teen used to kill himself.

OpenAI said it’s strengthening safeguards and plans to release parental controls. Its chief executive, Sam Altman, wrote in a September blog post that the company believes minors need “significant protections” and the company prioritizes “safety ahead of privacy and freedom for teens.” The company declined to comment on the California AI chatbot bills.

To California lawmakers, the clock is ticking.

“We’re doing our best,” Bauer-Kahan said. “The fact that we’ve already seen kids lose their lives to AI tells me we’re not moving fast enough.”